No. I do not have cancer. But in April and May and June of this year, I thought I might.

So that’s the answer to the question in my headline. I’ve been taking a break while I deal with the roller coaster of emotions that come with suspicious mammogram and biopsy results and then surgery. First, the story.

In April, I had an ordinary, run-of-the-mill mammogram. I’m what you call a non-compliant patient, and so I’ve only had one other mammogram in my life. Turns out both of these great experiences ended up with biopsies. My first reaction was to be totally pissed off. I’d had a biopsy before, and let me tell you, they are not fun. And since the first one showed nothing, I expected that this would be more of the same: an exceedingly uncomfortable and nerve-wracking experience that showed nothing.

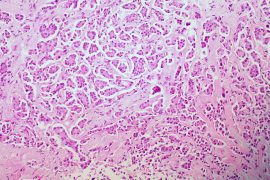

Except it didn’t. The biopsy showed “atypical” cells. This means I had something called Atypical Ductal Hyperplasia or ADH. This is not cancer. These atypical cells cannot even be called precancerous cells. My amazing surgeon explained: Research shows that women with ADH have an increased chance of those atypical cells becoming cancer. Here are the numbers:

- Women without ADH have about a 5 percent chance of getting breast cancer.

- Women with ADH have a 20 percent chance of getting breast cancer.

- And that means women with ADH have four times the chance of getting breast cancer.
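For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The function names are my own, and the inputs are the rounded 5 and 20 percent figures from the list above, not precise clinical values.

```python
def relative_risk(risk_with_pct, risk_without_pct):
    """How many times larger the first risk is than the second."""
    return risk_with_pct / risk_without_pct


def absolute_increase(risk_with_pct, risk_without_pct):
    """Difference between the two risks, in percentage points."""
    return risk_with_pct - risk_without_pct


# Rounded figures quoted in the post (percent chance of breast cancer).
without_adh = 5
with_adh = 20

print(f"Relative risk: {relative_risk(with_adh, without_adh):.0f} times")
print(f"Absolute increase: {absolute_increase(with_adh, without_adh)} percentage points")
```

The same pair of numbers can be described as "four times the chance" or as "15 percentage points higher." Both are correct, and they can feel very different.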

For me, those numbers pointed to a very easy decision: to have the area with ADH removed. On July 5, I had a lumpectomy. Then I waited for the pathology results. I waited for ten days.

Anyone who has gone through something similar knows the special hell these ten days were. I am not a particularly emotional person. And yet, these ten days were downright terrifying. And here’s why.

There was a 20 percent chance that the lumpectomy would reveal cancer. In other words, there was a slight chance that the biopsy had missed cancerous cells that were already there. Of course, that meant I had an 80 percent chance of no cancer at all.

After the surgery, I updated my friends and family. One physician friend emailed me back: “I hope you find some solace in those stats (ie the 80%).” I assured her that I did. (No lie at that point.) And she followed up with this:

“Glad to hear how you’re taking it. You are right about the stats. They are often very difficult for patients, because if there is a small chance of something, but a patient has it, that patient has 100% chance of having it, right? But we as physicians use stats all the time, especially in the office setting where you don’t have any and every diagnostic test at your fingertips, and with the cost, psychological and financial, to the patient: what is the chance that this patient with this headache and those symptoms has a brain tumor? What are the chances that this person’s chest pain is a heart attack and not indigestion? It is probability, given symptoms, age, and a slew of other factors, in combination with the implications of a given diagnosis.”

These numbers were supposed to ease my mind. Except feelings + stats + time = complete and utter freak out.

By day nine of my waiting period, I was a total wreck. I cried all day long. I wasn’t sure if I was going to be able to sleep. I was as nervous as a long-tailed cat in a room full of rocking chairs.

Happy ending: I don’t have cancer. I know that not everyone gets that amazing news, and I am extremely grateful. I am being followed very closely, because my chances of getting breast cancer are still higher than most women’s. And I’m taking tamoxifen for the next five years, which reduces my chances by half. Those aren’t bad stats either.

I never thought that math was the be-all and end-all, but I have often railed against misinterpreting numbers to incite fears and advocated for the use of statistics to ease worry. Still, feelings don’t always play well with math, I’ve found. When a person is worried, scared even, a pretty percentage may not be comforting. And that’s okay, too. We all do the best we can with what we’ve got.

What’s your story with health and statistics? Has a percentage ever frightened you to the point of distraction or temporary insanity? Share your story here. You are not alone!